TL;DR

- Insight drives talent discovery, and oversight ensures fairness.

- Combining the two helps reduce AI bias and the risk of legal penalties.

- Human-led automation is vital for executive and niche roles.

- Regulations increasingly require audits of automated hiring systems.

The modern recruitment landscape is facing a crisis of confidence as organizations rush to automate their talent pipelines. Speed is a massive advantage, but the lack of transparency in automated tools has left many candidates feeling that they are being judged by an uncaring machine. Without a clear plan for hiring insight & oversight, companies can carry hidden bias forward and push away the talent they depend on to grow.

The solution lies in a balanced ecosystem where data-driven insights meet human-led governance. By putting hiring insight & oversight first, businesses can use AI for speed while still protecting fairness and the human care that long-term hires require.

Why Trust Matters in Modern Hiring

Trust is the currency of the modern labor market. According to survey data cited in recent recruitment technology news, roughly 66% of U.S. adults say they would not apply for a job if they knew AI was making the final hiring decision without human intervention.

When candidates trust that a process is fair, they are more engaged, which leads to higher-quality applications and a stronger employer brand. With about 92% of companies planning to spend on AI tools over the next three years, employers who maintain a real human connection will stand out the most.

What Is Hiring Insight and Oversight?

In the context of recruitment, “insight” refers to the deep, data-backed understanding of candidate potential, market trends, and workforce planning and analytics. It is about knowing who is the best fit based on more than just keywords.

Oversight acts as a safeguard run by people, not just systems. It keeps humans involved in reviewing automated choices so that those choices match company values and legal rules. Together, hiring insight & oversight keep hiring fast while staying fair, careful, and ethical.

Risks of AI Hiring Without Oversight

Relying solely on algorithms is a high-stakes gamble. Without proper hiring insight & oversight, AI tools can inadvertently “learn” to favor specific demographics based on historical data flaws.

For example, the EEOC’s first AI-related hiring discrimination lawsuit ended in a $365,000 settlement after an automated tool was alleged to have filtered out candidates by age. Beyond legal fees, “dark” AI hiring, where decisions are made in a vacuum, can lead to a “homogenized” workforce, killing the diversity of thought that drives innovation.

How Insight Improves Hiring Decisions

True insight goes beyond basic resume scanning. It allows for a more nuanced talent assessment with recruiter software, evaluating soft skills, cultural alignment, and growth potential.

When it comes to AI recruiting agents’ effectiveness in niche roles, advanced agents can reportedly identify candidates for specialized fields like quantum computing or cybersecurity by analyzing their contributions to open-source projects or niche hackathons. These are details that a human might miss in a pile of thousands of resumes.

How Oversight Protects Organizations

Oversight serves as a legal and ethical safety net for the organization. It makes sure each automated suggestion is reviewed by a skilled professional who understands the role in full. This matters even more when balancing automation in the executive hiring process, where leadership decisions carry high risk and cannot rely on a programmed match alone.

In practice, oversight means recruiters routinely review hiring results to check whether any group is being filtered out at a disproportionate rate, so that adjustments can be made early, before a trend takes hold.
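As a rough illustration of that kind of routine review, the sketch below computes how often each group advances past an automated screen and compares every group against the best-performing one. The function names, the sample data, and the 0.8 threshold (borrowed from the "four-fifths" rule of thumb used in U.S. adverse-impact analysis) are assumptions for illustration. A flagged ratio is a prompt for a closer human look, not a legal finding.

```python
from collections import Counter

def selection_rates(outcomes):
    """Map each group to the share of its applicants who advanced.

    `outcomes` is an iterable of (group, advanced) pairs, where `advanced`
    is True if the automated screen passed the candidate forward.
    """
    totals, advanced = Counter(), Counter()
    for group, was_advanced in outcomes:
        totals[group] += 1
        if was_advanced:
            advanced[group] += 1
    return {group: advanced[group] / totals[group] for group in totals}

def impact_ratios(rates):
    """Divide each group's rate by the highest rate across all groups."""
    best = max(rates.values())
    if best == 0:
        # Nobody advanced at all, so ratios are undefined.
        return {group: None for group in rates}
    return {group: rate / best for group, rate in rates.items()}

def flag_groups(outcomes, threshold=0.8):
    """Return groups whose impact ratio falls below the threshold (assumed 0.8)."""
    ratios = impact_ratios(selection_rates(outcomes))
    return sorted(g for g, r in ratios.items() if r is not None and r < threshold)

# Hypothetical screening results: 10 applicants per group.
sample = (
    [("A", True)] * 5 + [("A", False)] * 5    # group A: 5 of 10 advance
    + [("B", True)] * 3 + [("B", False)] * 7  # group B: 3 of 10 advance
)
flagged = flag_groups(sample)
```

With this sample, group B advances at 60% of group A's rate, so it lands in `flagged` and a recruiter would investigate why before the pattern hardens.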

Compliance Requirements AI Hiring Must Meet

Rules around hiring technology have moved from monitoring to enforcement, and AI recruitment compliance is no longer optional.

- NYC Local Law 144 requires a yearly independent (third-party) bias audit for automated employment decision tools used in hiring.

- Colorado SB 24-205, signed into law in 2024, requires employers to conduct impact assessments and to offer a human review step when an AI decision results in an adverse outcome.

- EEOC guidance says employers can remain responsible for discriminatory hiring outcomes even when a vendor builds the tool.

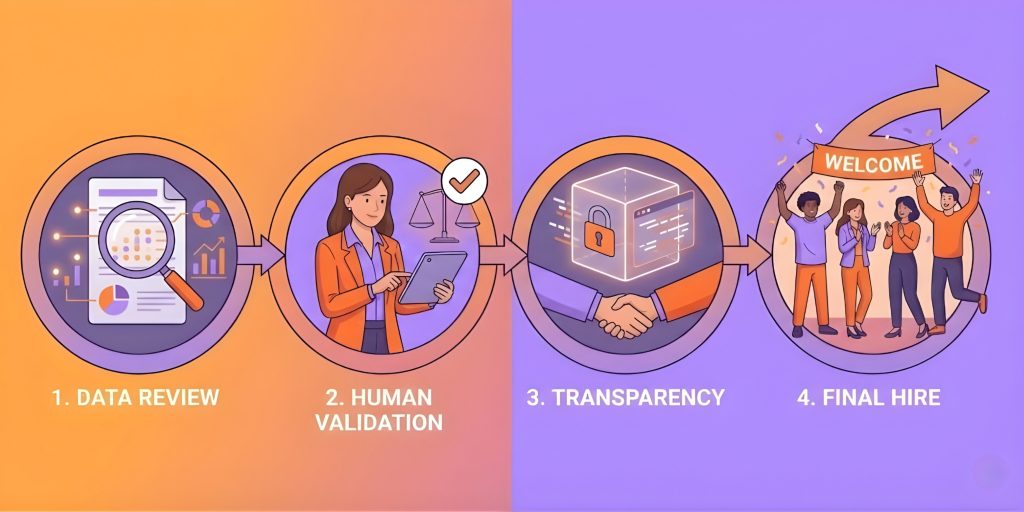

Building a Trust-First Hiring System (Step-by-Step)

Audit Your Tech Stack

Take a close look at every hiring tool your team is using and reach out to vendors for their latest bias reports. Doing this early helps you catch blind spots and see how each tool is shaping real hiring choices.
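One lightweight way to keep this audit current is a simple inventory that records when each tool's bias report was last received. The sketch below is only an assumption about how such an inventory might look. The `HiringTool` fields, the tool names, and the 365-day window are all hypothetical and should be adapted to your own tools and legal advice.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

@dataclass
class HiringTool:
    name: str
    vendor: str
    # Date of the most recent bias report received; None if never supplied.
    last_bias_audit: Optional[date]

def tools_needing_audit(tools, today, max_age_days=365):
    """Return names of tools with no bias report or one older than the window."""
    cutoff = today - timedelta(days=max_age_days)
    return [
        tool.name
        for tool in tools
        if tool.last_bias_audit is None or tool.last_bias_audit < cutoff
    ]

# Hypothetical inventory for illustration only.
inventory = [
    HiringTool("Resume screener", "Vendor A", date(2025, 1, 15)),
    HiringTool("Video interview scorer", "Vendor B", date(2023, 11, 1)),
    HiringTool("Chat assistant", "Vendor C", None),
]
overdue = tools_needing_audit(inventory, today=date(2025, 6, 1))
```

Here `overdue` lists the video interview scorer (report more than a year old) and the chat assistant (no report on file), which are the vendors to contact first.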

Establish Human in the Loop

Do not allow software to make final calls on its own. Give recruiters the chance to review close matches so good candidates are not filtered out too soon.
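A minimal sketch of this routing idea follows, assuming the screening tool emits a 0 to 100 match score. The cutoff, the margin, and the route names are hypothetical. The point is that no path ends in a decision the software makes alone, and near misses go to a recruiter for a full review.

```python
def route_candidate(score, cutoff=70, margin=10):
    """Decide who looks at a candidate next; software never makes the final call.

    `score` is an assumed 0-100 match score from a screening tool.
    Candidates just below the cutoff are treated as close matches.
    """
    if score >= cutoff:
        # Recommended by the tool, but a recruiter still confirms.
        return "shortlist_pending_signoff"
    if score >= cutoff - margin:
        # Close match: send to a recruiter for a full manual review.
        return "recruiter_review"
    # Low score: a recruiter signs off before any rejection is sent.
    return "decline_pending_signoff"

# Hypothetical scores for three candidates.
routes = {name: route_candidate(score)
          for name, score in [("cand_1", 82), ("cand_2", 65), ("cand_3", 40)]}
```

In this example `cand_2` scores below the cutoff but within the margin, so the candidate is reviewed by a person instead of being filtered out.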

Prioritize Transparency

Be clear with candidates about how technology is used in your hiring process. Simple honesty builds trust and can encourage more people to apply.

Integrate Data Sources

Use a centralized platform to ensure your hiring insight & oversight data isn’t fragmented across different systems.

Train Your Team

Educate hiring managers on interpreting AI suggestions without treating them as absolute truths.

Conclusion

The purpose of hiring technology is not to remove recruiters but to support them with clearer information and more time to focus on people. When hiring insight & oversight guide the process, teams can hire faster without losing the human side of recruiting. Do not wait for a compliance review to force change. Start building a trust-first hiring system now to attract the right talent in the future.