TL;DR

- Ethical AI use protects hiring teams from bias and keeps assessments fair.

- AI scoring can create problems when rules are unclear or untested.

- With audits, data checks, and human review, teams can build safe workflows.

- Ethical AI creates a smoother experience for job seekers and reduces risk.

- Recruiters must understand how the AI works before relying on it for AI candidate pre-vetting.

Many teams want faster screening, so they turn to AI for help. The problem is that some scoring tools can learn from messy data. When this happens, the system may favor one group without anyone noticing. This hurts candidates, damages trust, and can even trigger legal trouble. Ethical AI Use in Talent Assessment Software matters because the wrong tool can create bias instead of removing it.

The good news is that companies can still use AI safely. With clear rules, data checks, and human review, teams can build a fair process. This blog explains how Ethical AI Use in Talent Assessment Software works, what to watch for, and how to build a safer workflow. Many of these ideas fit well with broader shifts in hiring technology, especially as automation continues to grow across HR teams.

What Ethical AI Actually Means in Talent Assessment

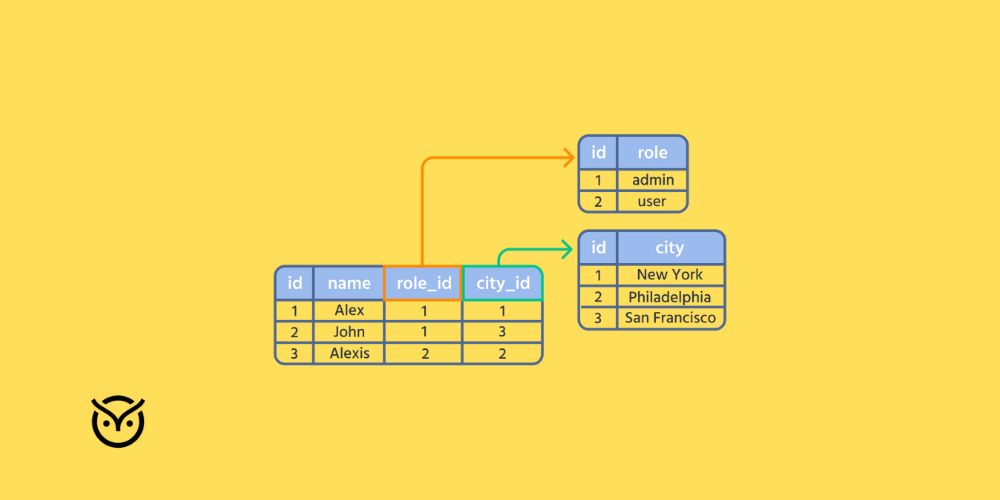

Ethical AI Use in Talent Assessment Software means using tools that score candidates fairly, explain how results are built, and protect people from hidden risks. It also means checking the AI often so it does not drift into biased patterns.

A fair system should meet three basic requirements:

- It must test skills needed for the job.

- It must score all individuals by the same rules.

- It must not harm any protected group.

A recent report from the US Government Accountability Office shared that many AI hiring tools still lack full transparency for users who depend on them.

This is why Ethical AI Use in Talent Assessment Software must include clear documentation, open scoring rules, and ongoing monitoring. Without this, teams risk using tools they do not fully understand.

Ethical AI also means giving candidates clarity. When people know how the system works, they feel calmer and more engaged. This helps build trust during the hiring process. These themes connect closely with the rising importance of technical AI skills and the shift in how companies hire AI engineers.

The Risks of Using AI in Candidate Scoring and How to Avoid Them

AI is fast, but it can make mistakes that humans do not see right away. These risks become bigger when teams depend too much on AI screening tools. Ethical AI Use in Talent Assessment Software reduces this risk by helping teams spot unfair patterns early.

Risk 1. Bias from training data

If the tool learns from old hiring data, it may repeat old patterns. A study from MIT showed that some commercial AI facial analysis systems misclassified darker-skinned women at far higher rates than lighter-skinned men.

How to avoid it

Use clean data, run audits, and remove any fields that do not relate to job skills.
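Some teams build or configure their own scoring pipeline. For them, "remove any fields that do not relate to job skills" can be enforced in code rather than left to habit. Below is a minimal Python sketch that assumes candidate records arrive as dictionaries. The field names and the `JOB_RELATED_FIELDS` allowlist are hypothetical and would come from your own job analysis.

```python
# Hypothetical allowlist: only fields tied to job skills may reach the scoring model.
JOB_RELATED_FIELDS = {"coding_test_score", "sql_exercise_score", "years_relevant_experience"}


def strip_non_job_fields(candidate: dict) -> dict:
    """Return a copy of the candidate record that keeps only allowlisted fields."""
    return {key: value for key, value in candidate.items() if key in JOB_RELATED_FIELDS}


def dropped_fields(candidate: dict) -> set:
    """Return the names of removed fields, so the audit trail shows what was excluded."""
    return set(candidate) - JOB_RELATED_FIELDS


record = {
    "coding_test_score": 82,
    "sql_exercise_score": 74,
    "years_relevant_experience": 3,
    "date_of_birth": "1990-04-12",
    "zip_code": "10001",
    "name": "A. Candidate",
}

clean = strip_non_job_fields(record)
print(sorted(clean))  # ['coding_test_score', 'sql_exercise_score', 'years_relevant_experience']
print(sorted(dropped_fields(record)))  # ['date_of_birth', 'name', 'zip_code']
```

An allowlist is used instead of a blocklist, so any new field added upstream stays excluded until someone confirms it is job-related. Even allowlisted fields can act as proxies, though. Years of experience, for example, tends to track age. That is why audits still matter.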

Risk 2. Over-reliance on automation

AI tools cannot reliably read emotions or understand context. If recruiters depend on them alone, they risk missing strong candidates.

How to avoid it

Use AI as support, not the final decision maker. Human review should come after the score.

Risk 3. Poor handling of sensitive traits

AI systems can pick up signs of age, gender, or disability even when not told directly. This happens through patterns in writing, pacing, or voice.

How to avoid it

Ask the vendor for bias testing results. Use scoring models that focus only on job tasks.

Risk 4. Unsafe pre-vetting

Some teams use AI for early filtering, which produces fast results but also raises fairness concerns. This is why teams should ask: "Is it safe to use AI for candidate scoring in AI candidate pre-vetting?"

These concerns are becoming even more common as the demand for AI talent continues to climb, which has created new pressure on hiring teams.

Building an Ethical AI Workflow in Talent Assessment

Ethical AI Use in Talent Assessment Software works best when the entire workflow supports fairness from start to finish.

Step 1. Start with job-related skills

List what the job needs. Build assessments around tasks, not traits. This reduces bias because the AI focuses on what matters.
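A fixed rubric with published weights is one way to keep scoring tied to tasks and to apply the same rules to every candidate. This is a minimal sketch. The role, task names, and weights are hypothetical examples, not a recommended rubric.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    weight: float  # share of the total score; all weights must sum to 1.0


# Hypothetical rubric for a data analyst role, built from the job's actual tasks
RUBRIC = (
    Task("clean_a_dataset", 0.4),
    Task("write_sql_query", 0.4),
    Task("explain_findings", 0.2),
)


def score_candidate(task_scores: dict, rubric=RUBRIC) -> float:
    """Combine 0-100 task scores using fixed weights; every candidate gets the same rubric."""
    total_weight = sum(task.weight for task in rubric)
    if abs(total_weight - 1.0) > 1e-9:
        raise ValueError("rubric weights must sum to 1.0")
    missing = [task.name for task in rubric if task.name not in task_scores]
    if missing:
        raise ValueError(f"missing task scores: {missing}")
    return round(sum(task.weight * task_scores[task.name] for task in rubric), 2)


print(score_candidate({"clean_a_dataset": 80, "write_sql_query": 70, "explain_findings": 90}))  # 78.0
```

The function refuses to score when a task result is missing. This keeps any candidate from being judged on a different basis than the others.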

Step 2. Compare scores across groups

Run audits every few months to see how different groups score. If one group drops far below the others, review the scoring rules right away.
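In US hiring, a common starting point for these audits is the "four-fifths rule" from the federal Uniform Guidelines on Employee Selection Procedures. If a group's selection rate is less than 80 percent of the highest group's rate, that is generally treated as evidence of possible adverse impact. The sketch below computes those ratios. The group labels and counts are made up. A flag is a signal to investigate, not a legal conclusion, and small samples can produce noisy ratios.

```python
def selection_rates(outcomes: dict) -> dict:
    """outcomes maps group -> (number who passed, number assessed); assessed must be > 0."""
    return {group: passed / assessed for group, (passed, assessed) in outcomes.items()}


def adverse_impact_ratios(outcomes: dict) -> dict:
    """Divide each group's selection rate by the highest group's selection rate."""
    rates = selection_rates(outcomes)
    top = max(rates.values())
    if top == 0:
        raise ValueError("no group had any candidates pass; ratios are undefined")
    return {group: rate / top for group, rate in rates.items()}


def flag_groups(outcomes: dict, threshold: float = 0.8) -> list:
    """Return groups whose ratio falls below the four-fifths (0.8) guideline."""
    ratios = adverse_impact_ratios(outcomes)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical audit numbers: group -> (passed the screen, took the assessment)
audit = {"group_a": (48, 80), "group_b": (27, 60), "group_c": (35, 70)}
print(adverse_impact_ratios(audit))
print(flag_groups(audit))  # ['group_b']
```

In this example, group_b passes at 45 percent against group_a's 60 percent, a ratio of 0.75. That falls under the 0.8 guideline, so the scoring rules for that step would get a closer look.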

Step 3. Keep humans in the loop

AI gives a score, but the recruiter makes the final decision. This balance keeps the process fair and safe.
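In software terms, keeping humans in the loop can mean the AI output is stored only as a recommendation. A result counts as final only after a named recruiter records a decision and a reason. This is a minimal sketch under that assumption. The class, the field names, and the cutoff mentioned in the example note are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ScreeningResult:
    candidate_id: str
    ai_score: float
    ai_note: str
    recruiter: Optional[str] = None
    decision: Optional[str] = None  # "advance" or "reject", set only by a person
    reason: Optional[str] = None

    def record_decision(self, recruiter: str, decision: str, reason: str) -> None:
        """Require a named recruiter to supply the final call and a written reason."""
        if decision not in {"advance", "reject"}:
            raise ValueError("decision must be 'advance' or 'reject'")
        if not reason.strip():
            raise ValueError("a reason is required for every human decision")
        self.recruiter, self.decision, self.reason = recruiter, decision, reason

    @property
    def is_final(self) -> bool:
        # The AI score alone never makes a result final.
        return self.decision is not None and self.recruiter is not None


result = ScreeningResult("cand-001", ai_score=62.5, ai_note="below suggested cutoff of 70")
print(result.is_final)  # False
result.record_decision("j.rivera", "advance", "Strong portfolio the test did not cover")
print(result.is_final)  # True
```

The recruiter can overrule a low AI score, as in this example. Requiring a written reason also produces the kind of record described in Step 7.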

Step 4. Review vendor documentation

Ethical AI Use in Talent Assessment Software depends on transparency. Vendors should share:

- validation studies

- scoring details

- proof of fairness checks

If the vendor refuses, the tool may not be safe.

Step 5. Give clear instructions to candidates

Candidates need to know what the test measures. When instructions are simple, test results become steadier and more accurate.

Step 6. Set up an appeal path

Candidates should have a way to ask questions if they think a score was unfair. This extra layer shows respect and protects the company.

Step 7. Save records for audits

Hiring teams should save scoring logs and audit results. This helps prove fairness if questions come up later.
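An append-only file with one JSON record per scoring event is a simple way to keep logs that hold up in a later review. The sketch below also stores a SHA-256 hash of each entry together with the previous entry's hash, so editing or deleting an old line breaks the chain. It is an illustration that assumes a single writer and a local file. The file name, model version label, and field names are hypothetical. A real system would also need access controls and a retention policy.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _hash(entry: dict) -> str:
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode("utf-8")).hexdigest()


def _last_hash(log_path: Path) -> str:
    if not log_path.exists():
        return GENESIS
    lines = log_path.read_text(encoding="utf-8").splitlines()
    return json.loads(lines[-1])["entry_hash"] if lines else GENESIS


def log_score(log_path: Path, candidate_id: str, model_version: str, score: float, reviewer: str) -> dict:
    """Append one scoring event as a JSON line, chained to the previous entry's hash."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "candidate_id": candidate_id,
        "model_version": model_version,
        "score": score,
        "reviewer": reviewer,
        "prev_hash": _last_hash(log_path),
    }
    entry["entry_hash"] = _hash(entry)
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(entry, sort_keys=True) + "\n")
    return entry


def verify_log(log_path: Path) -> bool:
    """Recompute every hash; return False if any line was altered, removed, or reordered."""
    prev = GENESIS
    for line in log_path.read_text(encoding="utf-8").splitlines():
        entry = json.loads(line)
        claimed = entry.pop("entry_hash")
        if entry["prev_hash"] != prev or _hash(entry) != claimed:
            return False
        prev = claimed
    return True


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "scoring_log.jsonl"
    log_score(path, "cand-001", "model-v1", 78.0, "j.rivera")
    log_score(path, "cand-002", "model-v1", 64.5, "j.rivera")
    intact = verify_log(path)
    # Simulate someone quietly editing an old score.
    lines = path.read_text(encoding="utf-8").splitlines()
    lines[0] = lines[0].replace('"score": 78.0', '"score": 95.0')
    path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    after_edit = verify_log(path)

print(intact, after_edit)  # True False
```

This makes tampering detectable, not impossible. Someone who can rewrite the whole file could rebuild the chain, so the log should still live somewhere with restricted write access.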

A survey from Pew Research found that 66 percent of Americans want more rules on how companies use AI for decision making.

The Future of Ethical AI in Hiring

AI in hiring will keep growing, but so will the need for safe rules. Ethical AI Use in Talent Assessment Software will focus more on skill testing instead of personality or behavior predictions. This shift reduces bias because it looks at what people can do, not who they are.

Future tools will likely offer:

- real-time bias alerts

- clearer score explanations

- stronger privacy controls

- more inclusive testing formats

More governments are already writing laws about AI hiring. In the United States, New York City's Local Law 144 now requires independent bias audits for automated employment decision tools used in the city.

This means Ethical AI Use in Talent Assessment Software will not just be helpful. It will become a requirement.

AI will never replace human judgment, but it can help teams hire faster and fairer when used responsibly. The companies that learn this early will have stronger and more diverse teams in the long run.

Conclusion

Ethical AI Use in Talent Assessment Software helps companies stay fair while still moving quickly. AI can support hiring decisions, but only when used with clear scoring, regular audits, and human review. With the right workflow, companies can avoid bias, improve trust, and create a better experience for both candidates and recruiters. Ethical AI is not just a tool. It is a commitment to hiring that respects every person equally.

FAQs

Q1. Should AI be used alone to make hiring decisions?

No. AI can support the process, but final decisions should be made by a human who can understand context.

Q2. How can companies verify whether an AI hiring tool is ethical?

Check validation studies, fairness audits, scoring explanations, and vendor transparency.

Q3. What responsibilities do recruiters have when using AI?

Recruiters must understand how the tool works, watch for bias, and ensure each score relates to real job skills.

Q4. Can ethical AI improve the candidate experience?

Yes. Clear instructions, skill-based scoring, and fair rules help reduce stress and create a smoother process for candidates.