TL;DR

- Adverse impact occurs when hiring methods disproportionately harm certain groups.

- Some hiring rules seem equal on the surface, but the results tell a different story.

- The four-fifths rule gives a quick way to check if one group is being left behind.

- Trouble often starts with tests or filters that do not truly reflect the job.

- Regular reviews of hiring steps help you avoid unfair treatment toward any group.

You know those job ads that say “everyone can apply”? Then you look at who gets hired, and it is always the same kind of person. It feels like the door is open, but only for a few people. Some rules look harmless, yet they quietly push certain groups aside. Understanding what adverse impact is will help you see where people are being held back, even when no one meant to do anything wrong.

In this blog, you will learn what adverse impact means in recruitment. You will learn how to spot it, what often causes it, and how you can avoid it so that your hiring feels fair and your team really is open to everyone.

What Is Adverse Impact in Recruitment?

When you talk about adverse impact (also referred to by some as “disparate impact”), you mean a situation where a hiring or selection method seems neutral, but ends up having a disproportionately negative effect on a group defined by race, gender, age, or another protected characteristic.

In simpler terms, you might apply the same test or screening rule to every candidate. But if that rule tends to eliminate far more people from one group than from another, even without intent, that is a problem. That, in short, is what adverse impact means in recruitment.

Under the law in many countries, including the US, such practices can be challenged unless they are job-related and consistent with business necessity.

How to Identify Adverse Impact

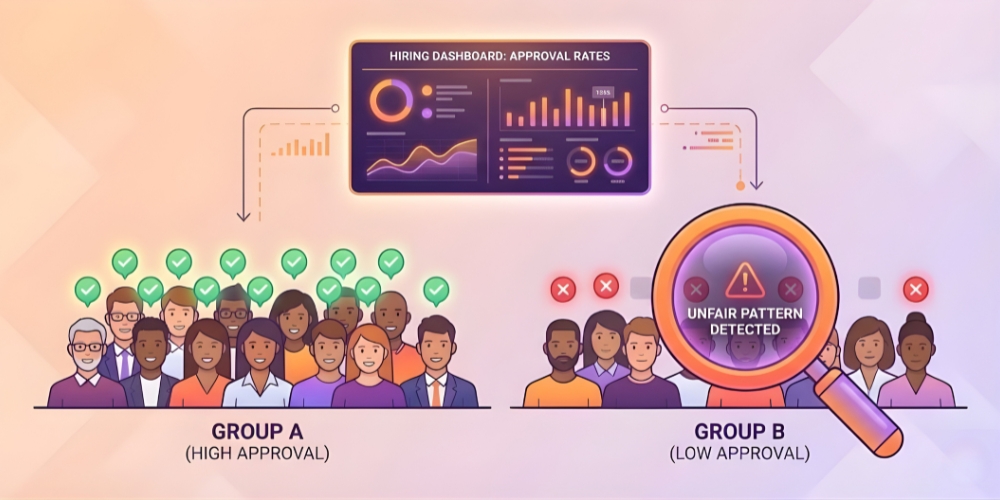

One well-known method for spotting adverse impact is the four-fifths rule (also called the 80% rule), set out in the US Uniform Guidelines on Employee Selection Procedures. Under this rule, if a group's selection rate is less than 80% of the rate for the group with the highest selection rate, that signals possible adverse impact.

For example, imagine 100 men and 100 women apply for a job. If 50 men and 35 women are selected, the men's selection rate is 50/100 = 50% and the women's is 35/100 = 35%. Dividing the lower rate by the higher gives 35% / 50% = 70%, which is below the 80% threshold and suggests possible adverse impact.
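
Because the four-fifths check is simple arithmetic, it is easy to automate. Below is a minimal Python sketch, assuming you already have applicant and selection counts for each group; the names `GroupOutcome`, `impact_ratios`, and `flag_adverse_impact` are hypothetical, not part of any standard tool. It compares every group against the group with the highest selection rate, as the rule describes.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class GroupOutcome:
    """Hypothetical record of how many people in one group applied and were selected."""
    applicants: int
    selected: int

    @property
    def selection_rate(self) -> float:
        if self.applicants <= 0:
            raise ValueError("A group needs at least one applicant")
        return self.selected / self.applicants


def impact_ratios(outcomes: dict[str, GroupOutcome]) -> dict[str, float]:
    """Divide each group's selection rate by the highest selection rate."""
    highest = max(o.selection_rate for o in outcomes.values())
    if highest == 0:
        raise ValueError("No one was selected, so ratios are undefined")
    return {group: o.selection_rate / highest for group, o in outcomes.items()}


def flag_adverse_impact(outcomes: dict[str, GroupOutcome], threshold: float = 0.8) -> list[str]:
    """Return the groups whose impact ratio falls below the four-fifths threshold."""
    return [group for group, ratio in impact_ratios(outcomes).items() if ratio < threshold]


# The example from the text: 100 men and 100 women apply; 50 men and 35 women are selected.
outcomes = {"men": GroupOutcome(100, 50), "women": GroupOutcome(100, 35)}
print({g: round(r, 2) for g, r in impact_ratios(outcomes).items()})  # {'men': 1.0, 'women': 0.7}
print(flag_adverse_impact(outcomes))  # ['women']
```

A ratio below 0.8 is a signal to investigate, not proof of discrimination. With small groups, one or two hires can move the ratio a lot.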

But identifying adverse impact isn’t always about simple math. The four-fifths rule is a rule of thumb, not a legal verdict, and when a gap does appear, organizations may need to show that the practice is “job-related and consistent with business necessity.”

Research also suggests that hiring discrimination remains widespread on many grounds, including age, disability, and appearance, not just race or gender.

Common Causes of Adverse Impact

Several common factors can create adverse impact, even when no one intends it.

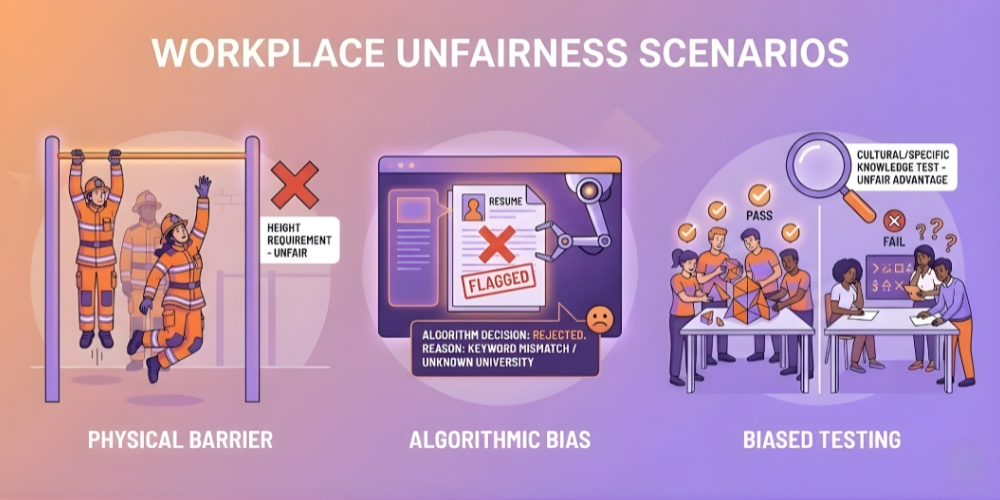

- Biased or irrelevant tests and assessments: Sometimes companies use general aptitude or skill tests that don’t truly reflect job requirements. For instance, in one field study, women were hired at 79% of the rate of men, just under the four-fifths threshold, and this was flagged as evidence of adverse impact.

- Strict screening criteria can disadvantage certain groups. For example, height or weight requirements may screen out more female applicants, and physical ability tests may disproportionately screen out older applicants.

- Background or credit checks can screen out applicants with certain histories, and may disproportionately disqualify applicants from racial minority groups or lower-income backgrounds.

- Over-reliance on qualification filters (like certain educational credentials, years of work experience, or language proficiency) can disadvantage underrepresented groups who may lack access to similar opportunities.

- Structural or systemic inequalities outside hiring can also play a role. Even a “neutral” hiring test can reinforce existing inequalities if groups have unequal access to prior education, training, or resources.

Automated tools and AI screeners deserve the same scrutiny. A tool marketed as neutral can still embed bias, so verify that any assessment you use is job-related and EEOC-compliant instead of assuming it is fair.

Examples of Adverse Impact in Hiring

Here are some situations where adverse impact discrimination can quietly show up:

Skills tests filter out women

A tech company uses a logic-heavy game to screen candidates for junior roles. Although the test looks neutral, women pass at a much lower rate. Because the test does not measure the job’s daily tasks, it screens out one group without a job-related reason.

Height requirements in public safety jobs

Some fire and security roles still have old height rules. These rules are a classic example of adverse impact because they screen out many female applicants who could still perform the job safely.

Over-reliance on degree requirements

Requiring a specific university degree screens out skilled workers from less privileged communities. The rule can create adverse impact because access to education is not equal everywhere.

AI screening issues

If a resume-screening tool was trained on biased historical hiring data, it may keep choosing candidates who resemble existing staff, unfairly filtering people by race, age, or gender. This is why AI screening tools belong in regular adverse impact audits.

How to Prevent Adverse Impact

Here is what companies can do to catch adverse impact before it becomes a legal problem:

Hire based on skills that truly matter

Review every requirement. If it is not tied to daily work or safety, remove it.

Validate assessments

Check that all tests are job-related. Run fairness checks often, especially when you add new roles or expand into new markets.

Use structured interviews

Ask every candidate the same questions. Score answers against clear rubrics. This leaves less room for hidden preferences.
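
Structured scoring can also be made explicit in code. The sketch below assumes a fixed question list and a 1 to 5 scale with written anchors; the questions, the scale, and `score_candidate` are hypothetical examples, not a standard instrument. It rejects incomplete or out-of-range ratings, so every candidate is scored on the same questions in the same way.

```python
from __future__ import annotations

from statistics import mean

# Hypothetical structured interview: every candidate gets these questions, in this order.
QUESTIONS = [
    "Describe a time you resolved a customer complaint.",
    "How would you prioritize three urgent tasks that arrive at once?",
]

# Hypothetical anchored 1-5 scale. Interviewers score against written anchors such as:
# 1 = no relevant example, 3 = relevant example with partial reasoning,
# 5 = clear example with concrete actions and a measurable outcome.
VALID_SCORES = range(1, 6)


def score_candidate(ratings: dict[str, list[int]]) -> float:
    """Average interviewer scores per question, then average across questions.

    `ratings` maps each question to the scores given by each interviewer.
    """
    if set(ratings) != set(QUESTIONS):
        raise ValueError("Every candidate must be rated on exactly the standard questions")
    for question, scores in ratings.items():
        if not scores or any(s not in VALID_SCORES for s in scores):
            raise ValueError(f"Scores for {question!r} must be whole numbers from 1 to 5")
    return mean(mean(scores) for scores in ratings.values())


print(score_candidate({QUESTIONS[0]: [4, 5], QUESTIONS[1]: [3, 3]}))  # 3.75
```

Averaging per question first keeps a question that happened to have more interviewers from dominating the total.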

Track your selection patterns

Check who applies versus who actually gets hired. If a rule causes a drop in success rates for a protected group, then you have to revisit that rule.
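
Tracking works best stage by stage, because it shows which rule causes the drop. The sketch below assumes you can count how many candidates from each group reached each stage; the stage names, group labels, and numbers are made up for illustration, and `stage_impact_ratios` is a hypothetical helper. For each stage it computes each group's pass rate relative to the previous stage and applies the four-fifths comparison.

```python
from __future__ import annotations

# Made-up funnel counts: candidates from each group who reached each stage, in order.
FUNNEL: dict[str, dict[str, int]] = {
    "applied": {"group_a": 200, "group_b": 180},
    "passed_screen": {"group_a": 120, "group_b": 90},
    "passed_test": {"group_a": 60, "group_b": 27},
    "hired": {"group_a": 20, "group_b": 9},
}


def stage_impact_ratios(
    funnel: dict[str, dict[str, int]], threshold: float = 0.8
) -> list[tuple[str, str, float]]:
    """Flag (stage, group, ratio) where a group's pass rate is below threshold x the best rate."""
    stages = list(funnel)  # dicts keep insertion order, so this is the funnel order
    flagged = []
    for prev, curr in zip(stages, stages[1:]):
        # Pass rate at this stage = reached this stage / reached the previous stage.
        rates = {
            group: funnel[curr].get(group, 0) / count
            for group, count in funnel[prev].items()
            if count > 0
        }
        top = max(rates.values(), default=0)
        if top == 0:
            continue  # nobody passed this stage, so there is no rate to compare against
        for group, rate in rates.items():
            ratio = rate / top
            if ratio < threshold:
                flagged.append((curr, group, round(ratio, 2)))
    return flagged


print(stage_impact_ratios(FUNNEL))  # [('passed_test', 'group_b', 0.6)]
```

In this made-up data, the test stage (not the screen or the final decision) is where group_b falls behind, so that test is the rule to revisit first.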

Educate your hiring team

Recruiters and managers must understand what adverse impact means and how discrimination can occur without intent. Awareness drives accountability.

Most importantly, consider tools that have already been checked for fairness and AI recruitment compliance instead of building risky screening from scratch.

The Role of AI in Reducing Adverse Impact

AI can help improve fairness when it is used with care rather than blind trust.

Better pattern spotting

AI can highlight trends humans may miss, such as small differences in pass rates between groups. This helps you spot early signs of adverse impact in hiring.

More consistent scoring

AI can review tests, short answers, or job simulations with the same standard for everyone. This reduces opinion-based filtering.

Checking results with real data

AI can monitor hiring decisions in the background and warn teams early if one group starts slipping through the cracks. This supports EEOC-compliant assessments and makes fairness a routine part of hiring instead of something checked only once in a while.
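
Here is a minimal sketch of that kind of background check, assuming each decision arrives with a group label. `SelectionMonitor`, the 500-decision window, and the 30-decision minimum are hypothetical choices for illustration, not settings from any particular product. It recomputes four-fifths ratios over recent decisions and only returns warnings, leaving the follow-up to people.

```python
from __future__ import annotations

from collections import Counter, deque


class SelectionMonitor:
    """Hypothetical monitor: warns when a group's recent selection rate falls
    below `threshold` times the highest group's rate."""

    def __init__(self, window: int = 500, min_per_group: int = 30, threshold: float = 0.8):
        self.decisions: deque[tuple[str, bool]] = deque(maxlen=window)  # oldest drop off
        self.min_per_group = min_per_group
        self.threshold = threshold

    def record(self, group: str, selected: bool) -> list[str]:
        """Log one decision and return warnings for groups currently below the threshold."""
        self.decisions.append((group, selected))
        applicants = Counter(g for g, _ in self.decisions)
        chosen = Counter(g for g, s in self.decisions if s)
        # Skip groups with too few recent decisions; tiny samples swing wildly.
        rates = {g: chosen[g] / n for g, n in applicants.items() if n >= self.min_per_group}
        if len(rates) < 2 or max(rates.values()) == 0:
            return []
        top = max(rates.values())
        return [
            f"{g}: impact ratio {rate / top:.2f} is below {self.threshold}"
            for g, rate in rates.items()
            if rate / top < self.threshold
        ]


monitor = SelectionMonitor(window=200, min_per_group=10)
for i in range(50):
    monitor.record("group_a", selected=i % 2 == 0)  # 25 of 50 selected
for i in range(50):
    alerts = monitor.record("group_b", selected=i % 5 == 0)  # 10 of 50 selected
print(alerts)  # ['group_b: impact ratio 0.40 is below 0.8']
```

The sliding window means the monitor reflects recent decisions, so a problem introduced by a new screening rule shows up without being diluted by older history.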

Conclusion

Fair hiring is about more than saying you value diversity. It is about seeing where the process might unfairly hold people back. Understanding what adverse impact means in recruitment gives you a clearer path to improve every part of your candidate journey.

When you check your rules, measure outcomes, and make changes early, you protect candidates, strengthen trust, and build teams that truly reflect the talent around you. Fair systems help everyone grow.